Eclipse Talks

Don't Panic:

Accelerate your approach to AB testing

Ellie Hughes

Head of Consulting

In this talk we cover three frameworks that give you the advice and tools you need to set up your programme correctly and enable future success.

First, we look at how to have the right attitude to potential failure.

Next, we walk through our three strategic frameworks:

Creating a Mindset for Experimentation

Deploying an Enablement Model for Experimentation

Running an Audit of your Enablement

STEP 1: PANIC!

Start by acknowledging that you and your business are out of your depth AND accept that you are going to fail while trying to improve.

STEP 2: Treat starting CRO in your organisation like launching a new startup. You need to think about these factors:

Marketing

Positioning

Ideal customer profile (eg product managers, marketers)

Funding: who is going to pay for this?

STEP 3: DON'T PANIC. Hire the CEO for your CRO startup and start reducing your panic and risk levels.

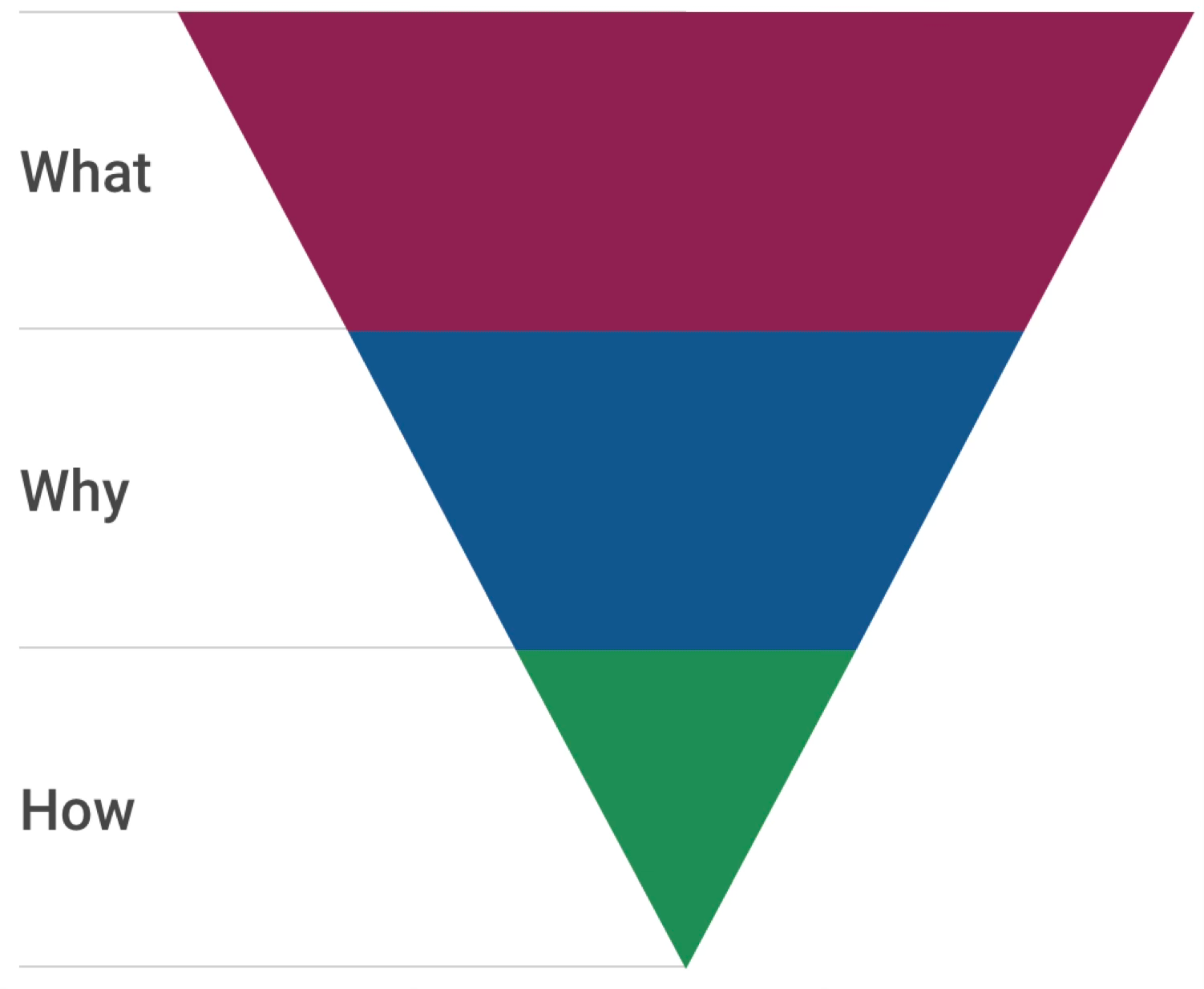

Part 1: Experimentation Mindset: The How, Why and What of Experimentation

Experiments consist of 3 key variables or decisions:

What you are testing: Changes you think will impact your KPI

Why you are testing: The impact you want to make on your KPI/metric or goal

How you will test that effectively and rigorously:

Process/Planning

Ideation

Execution

Analytics

Value

In many teams, the decision about what to test often comes first

They are employing the following strategy:

What phase: The team are doing ‘feature-led’ testing, where they decide what to test and then justify why they are testing it.

Why phase: The team look around for KPIs to support their pre-selected idea: "and so increase conversion…"

How: Finally, they work out how to test their feature, or whether it is even possible/feasible to run the experiment.

This happens well after choosing the experiment.

In actual fact, this hierarchy needs to be inverted

START with How:

How do we find out customer behaviour and problems from our metrics?

How do we ideate experiments?

How do we plan experiments?

How do we do experimental design?

How do we build experiments?

How do we test statistically and analytically?

How do we design the feature?

How do we know an experiment has won?

AFTER the How:

The Why: it becomes clear which KPIs can be influenced and whether these are actually important.

The What: Establishing the why leads to a growing number of much better ideas - what you'll work on.

The correct training and enablement free the team to be more autonomous and agile with their experiments.

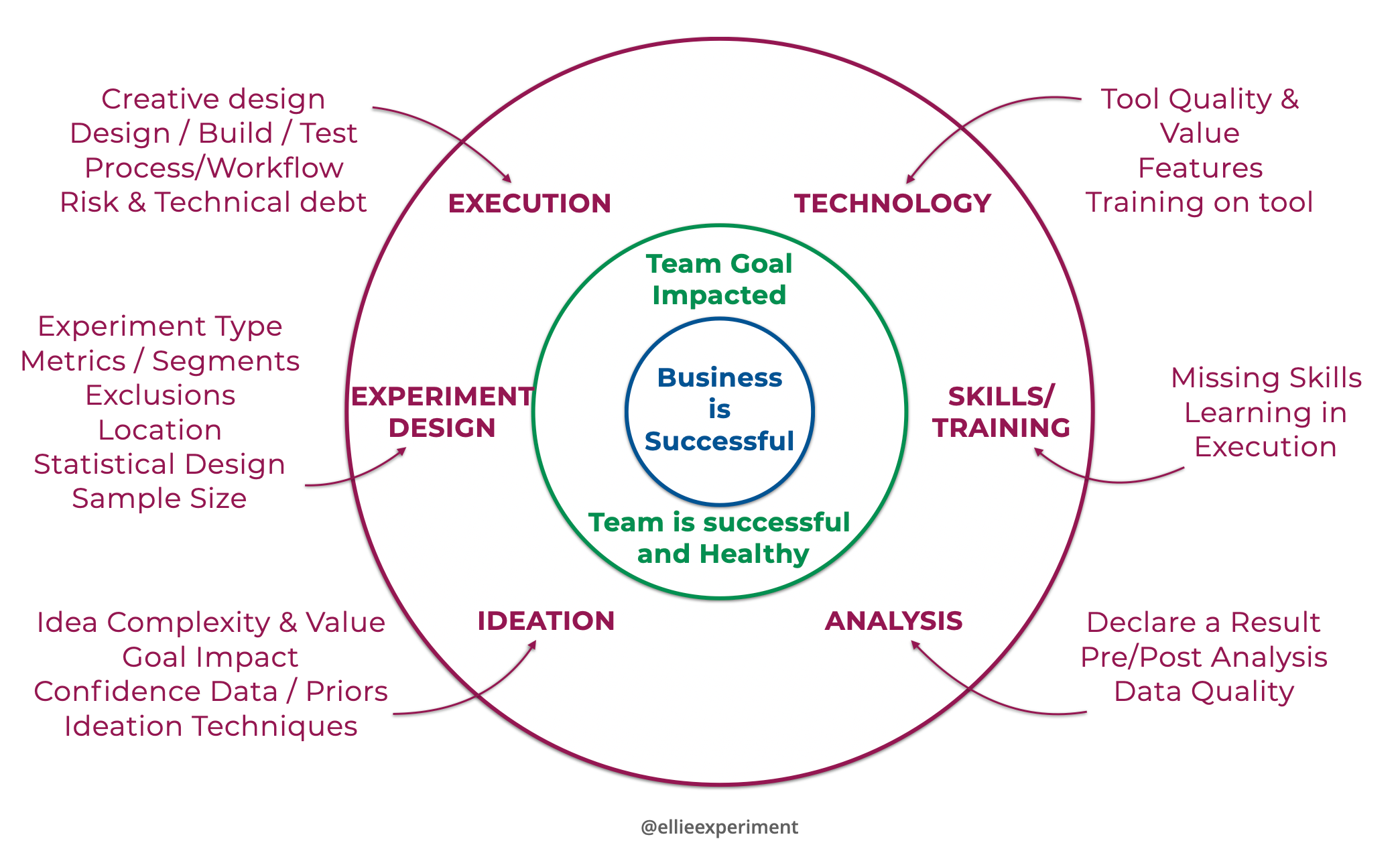

Part 2: Deploying an Enablement Model for Experimentation

Having got the right mindset, we then need to do the second half of our strategy:

Use an enablement model across the experiment lifecycle

This is designed to show how we can inject the How, Why and What into our ways of working, and help us diagnose where this is going wrong.

Looking back on our understanding of How, Why and What

We can see the experiment mindset maps neatly to the enablement model

Skills and Training, where I help up-skill the team, is triggered when the How, Why, What mindset fails.

But how do you diagnose that?

Part 3: What is an Enablement Audit?

How do you diagnose a failure of How, Why, What?

Lack of Impact on goal over time or over many experiments

Poor team health

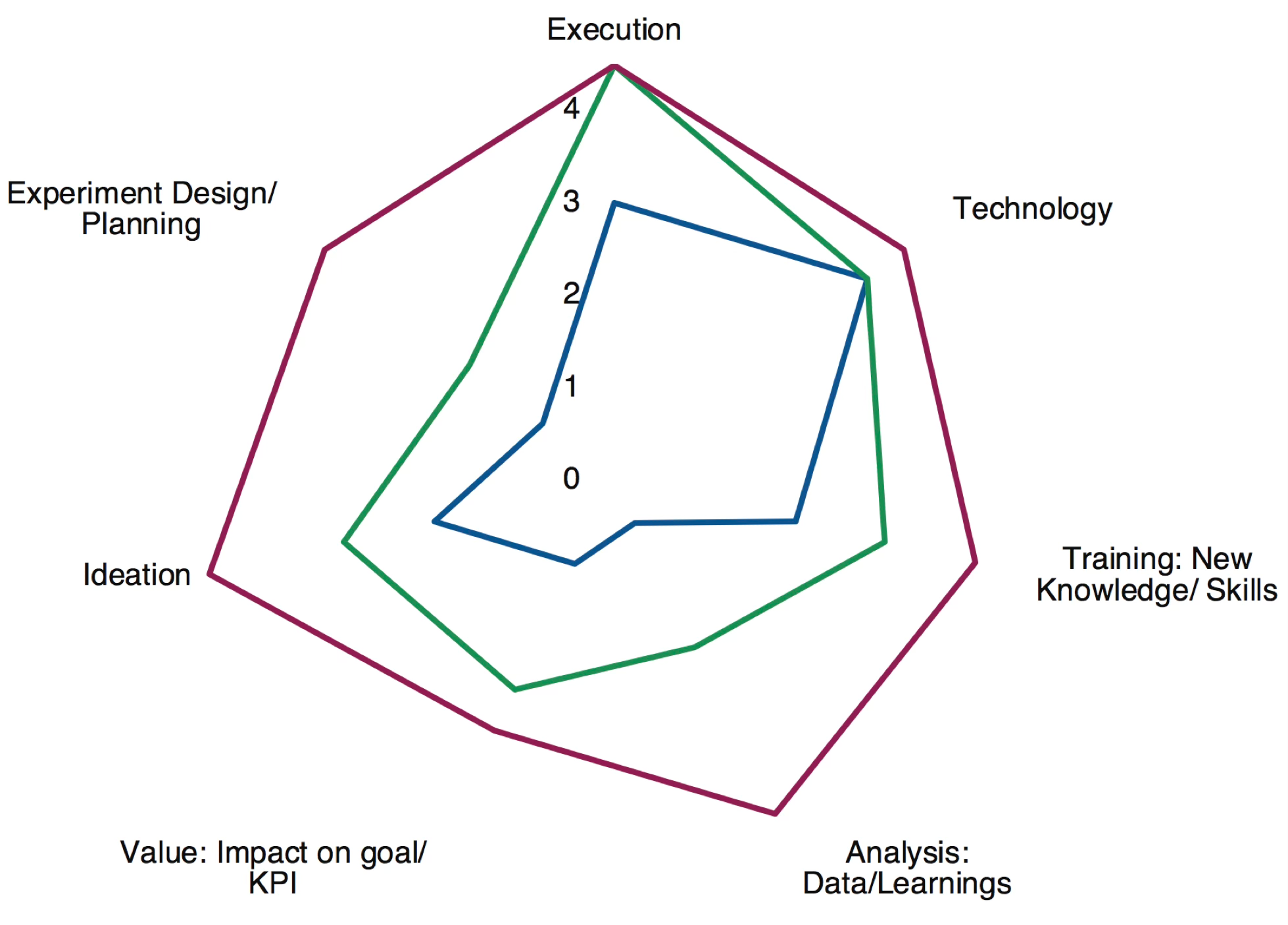

Using an Enablement Audit

An enablement audit looks back at what happened during the experiment: what the team did and how well they did it.

Make it quantitative!

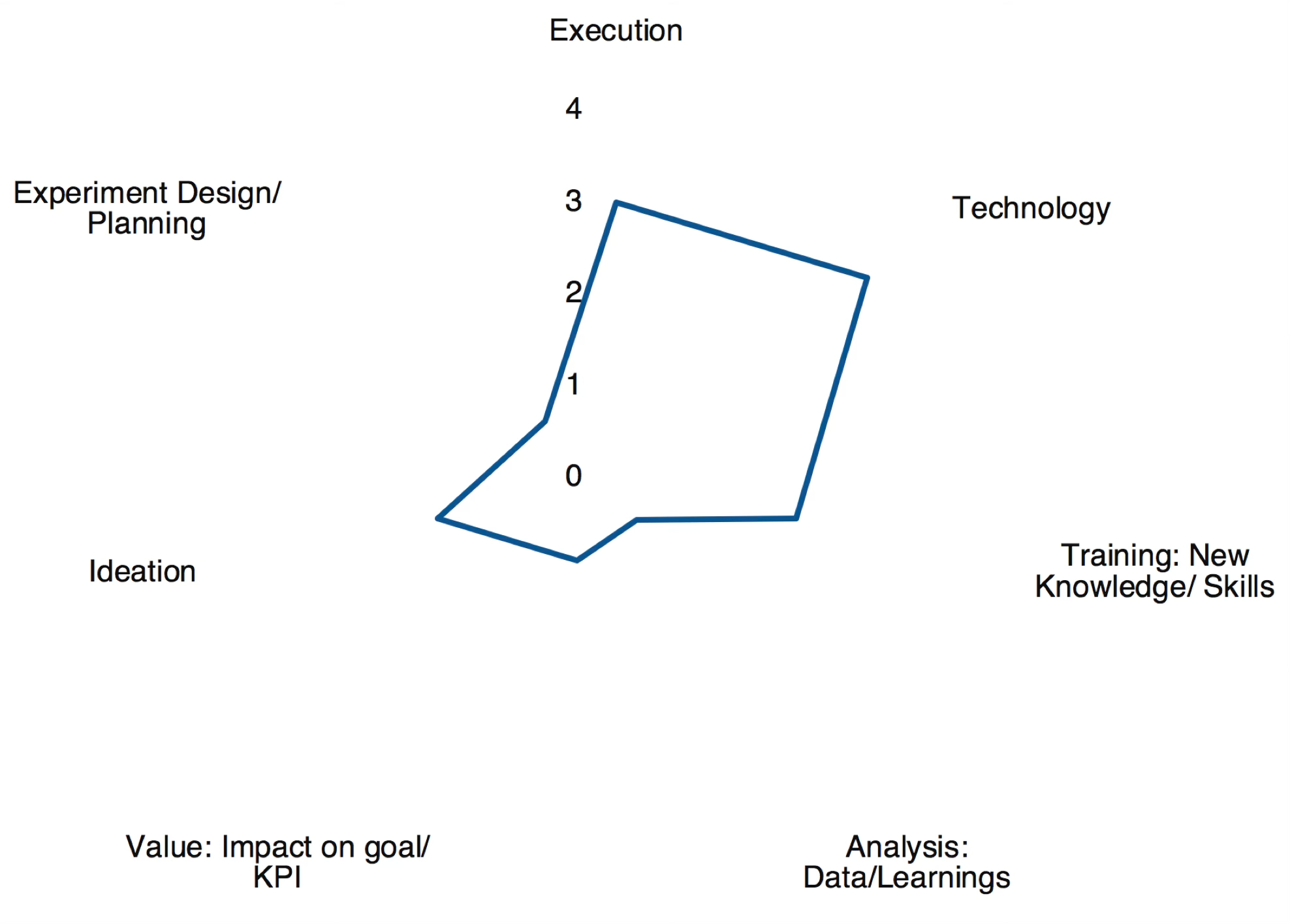

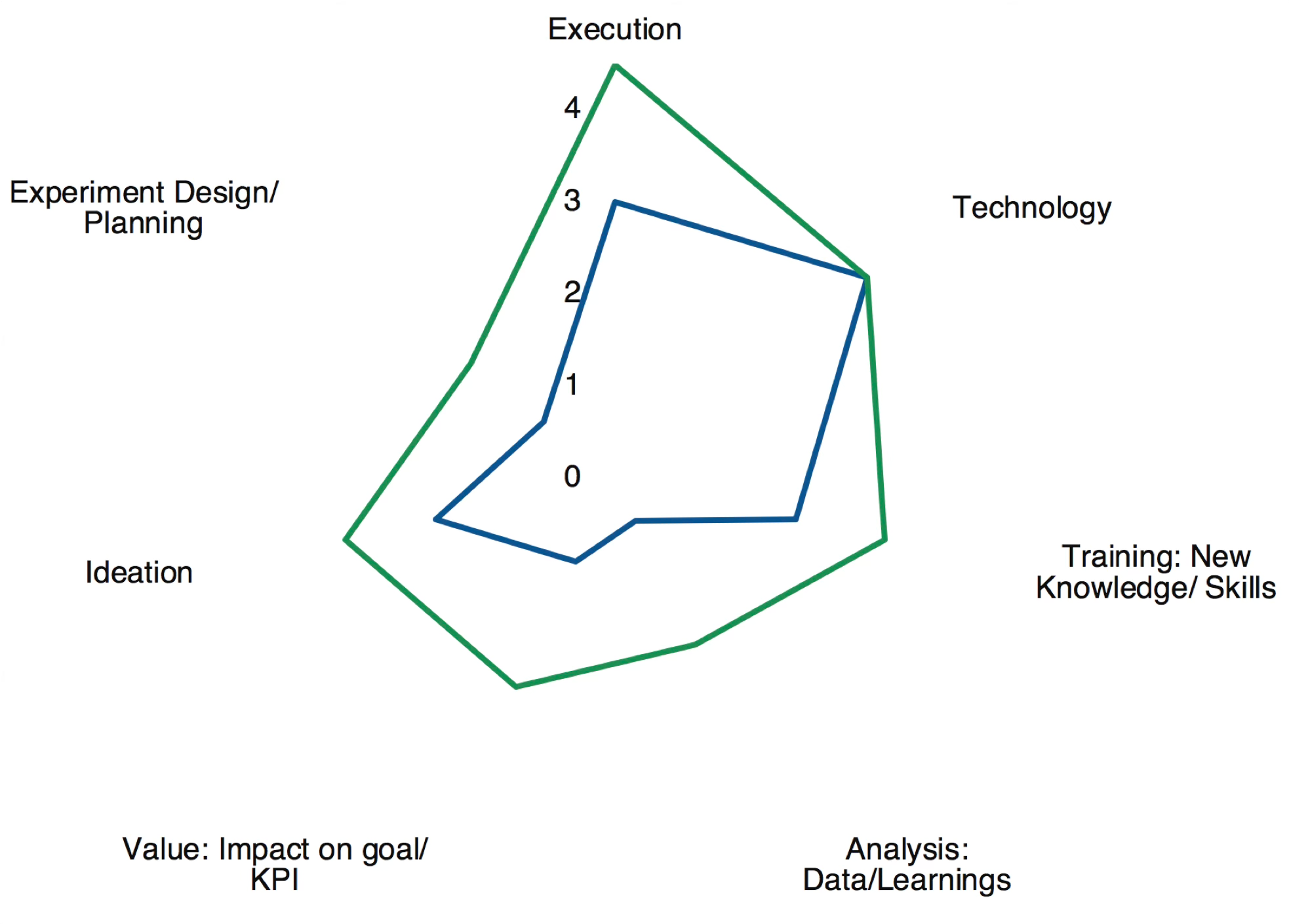

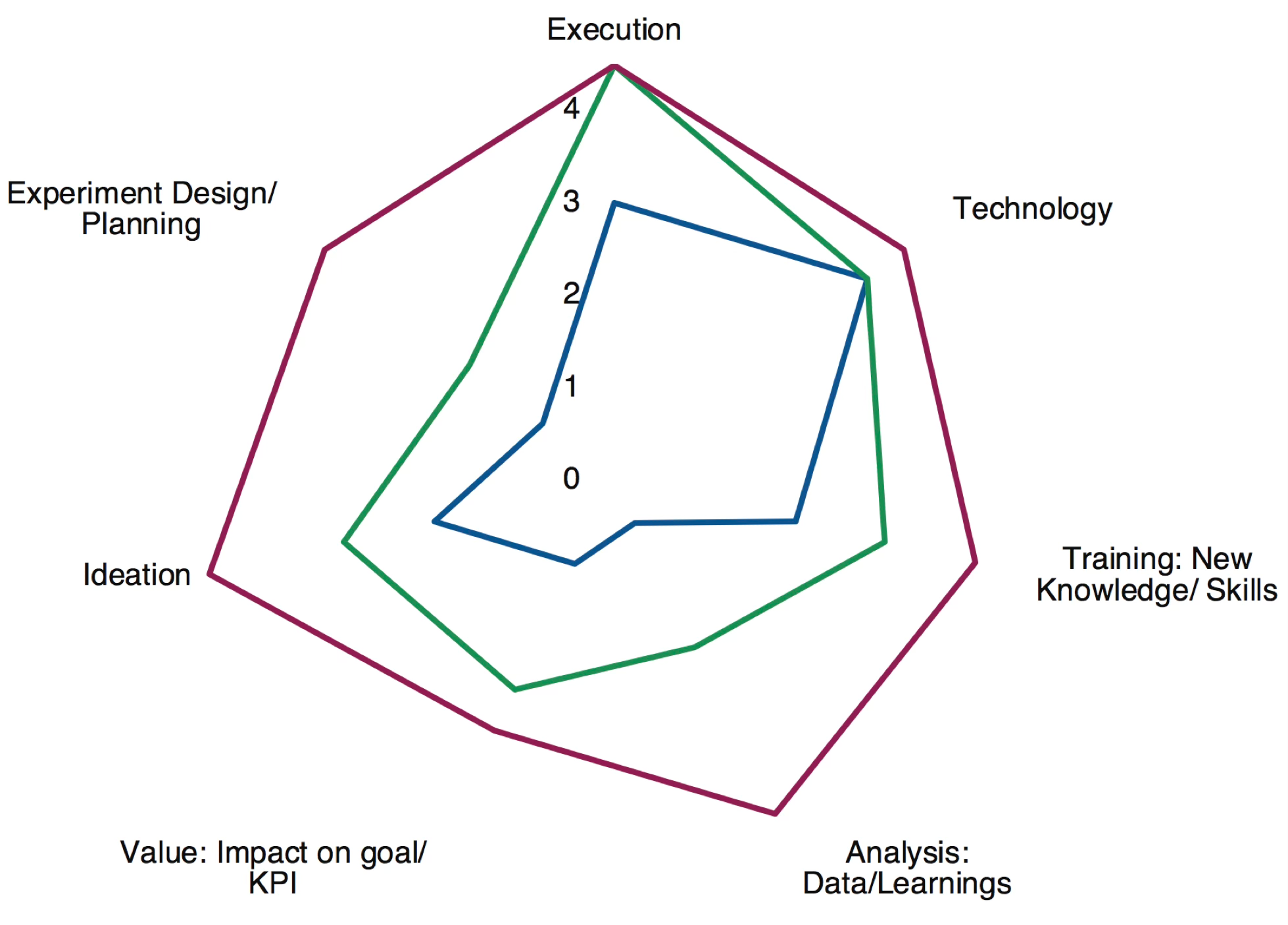

Using a simple spider scorecard, I work with our teams to reflect on where they need additional help.

For all the areas where they score 1 out of 5, we focus on bumping that up to a 2 or 3.
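As a rough illustration of making the audit quantitative, the scorecard can be held as a simple mapping from area to a score out of 5. This is only a sketch: the area names are borrowed from the "How" list earlier in the talk and the scores are made up, since the actual scorecard axes live in the images and are not reproduced here. The function names `focus_areas` and `next_targets` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical scorecard: area names are assumed from the "How" list,
# and the scores (1 to 5) are invented for illustration only.
scores: Dict[str, int] = {
    "Process/Planning": 2,
    "Ideation": 1,
    "Execution": 4,
    "Analytics": 1,
    "Value": 3,
}


def focus_areas(scorecard: Dict[str, int], threshold: int = 1) -> List[str]:
    """Return the areas scoring at or below the threshold, sorted by name."""
    for area, score in scorecard.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{area}: score {score} is outside the 1-5 scale")
    return sorted(area for area, score in scorecard.items() if score <= threshold)


def next_targets(scorecard: Dict[str, int]) -> Dict[str, Tuple[int, int]]:
    """Map each lowest-scoring area to the target range from the talk: a 2 or 3."""
    return {area: (2, 3) for area in focus_areas(scorecard)}


if __name__ == "__main__":
    for area, (low, high) in next_targets(scores).items():
        print(f"{area}: currently {scores[area]}, aim for {low} or {high}")
```

Keeping the scores in a plain mapping like this also makes it easy to compare audits over time, for example by storing one scorecard per quarter.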

Low Maturity: The team biases towards their comfort zones: technical skills and execution/delivery.

Medium Maturity: The team starts to focus more on analytics learnings and quality of ideation, leading to more impact on their goal.

High Maturity: The team invests heavily in training (whole team), analysis & product insights, and experiments with planning/process models and ideation techniques.

In summary

Treat optimisation like a start-up inside your business

Get the experimentation mindset right

Enable experimentation by enabling the teams running experiments

Measure and fix!

Need more ideas and tools you can use to improve experimentation, or want to try them out?

Book a coaching session with me from the button to the right!

About Ellie


If you enjoyed Ellie's talk or are making use of the resources here and want to know how you can implement them, please get in touch: connect with Ellie on LinkedIn or use our contact form.

Ellie has over 13 years' experience in the data and experimentation industry.

In that time, she has helped businesses to ship experiments at scale, grow their data and product capability and create more value from their experimentation programme.

She is the Head of Consulting at Eclipse, an experimentation-focussed agency in the UK.